Introduction

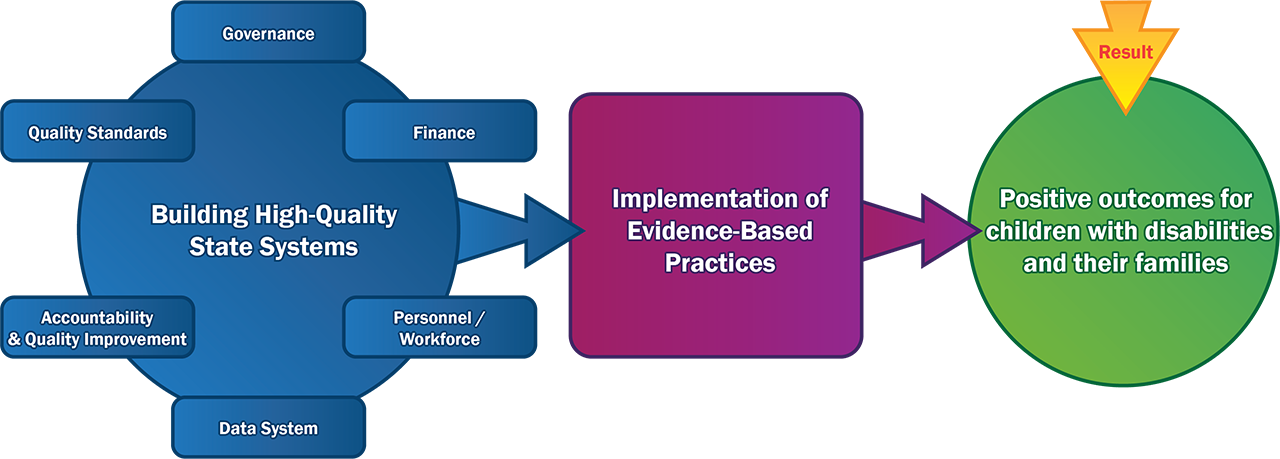

High-quality state and local systems support the implementation of evidence-based practices through policies, guidance, funding, professional development, coaching, and other activities. The implementation of these practices (referred to here as practice implementation) by practitioners working directly with children and families is expected to lead to improved child and family outcomes. This progression is shown in the theory of action below.

This tip sheet series is for evaluating the implementation of evidence-based practices (represented by the box in the middle of the theory of action). By evaluating practice implementation, states and local programs obtain vital information about (1) the effectiveness of the infrastructure and professional development activities intended to support practitioners in their implementation and (2) whether improved outcomes should be expected.

This tip sheet is designed to help state and local programs develop a clear understanding of what to measure when evaluating practice implementation. This understanding will create a strong foundation for planning and carrying out evaluation activities.

Evaluating Practice Implementation—Key Components

To design and conduct a high-quality evaluation of practice implementation, consider the following:

- Focus on measuring practices. Practices are the teachable, doable behaviors that practitioners exhibit when working with children and families. Through professional development, practitioners may increase their knowledge of and skills in a particular practice. Measuring participants’ knowledge, skills, and confidence is important for evaluating the quality of professional development, but it is not sufficient for evaluating practice implementation. If practitioners’ new capabilities do not produce consistent improvements in practices, the targeted child and family outcomes might not be achieved. High-quality evaluations measure practices, not just practitioner knowledge and skills.

- Clearly define and operationalize practices. Before selecting a tool to measure practice implementation, articulate the specific behaviors practitioners should exhibit to implement a practice with fidelity. Operationalizing a practice requires clearly and objectively describing the behaviors required to adequately implement the practice. To outline the components of a practice that are essential for acceptable implementation and to define how acceptable (and unacceptable) implementation of each component looks in practice, consider developing a Practice Profile (National Implementation Research Network, 2018).

- Assess practice change. Practice change refers to increases or decreases on measures of practice implementation across at least two time points. Incremental improvements in practice implementation can indicate that improvement strategies are working and highlight areas where practitioners need more support. Regular assessment of practice implementation allows programs and organizations to make adjustments in practice in a timely manner. Typically, practitioners demonstrate positive change in their practice before they reach full fidelity.

- Assess intervention fidelity. Intervention fidelity (referred to here as “fidelity”) indicates that a practitioner is implementing the evidence-based practice (or intervention) in the way its developers intended, so that improvements in family and/or child outcomes can be expected. Key components of fidelity are adherence to the practice, quality of delivery, and dosage (i.e., the amount of the intervention delivered to children or families). Fidelity is measured by evaluating a practitioner’s implementation of the practice according to a set of criteria and then comparing the results with a predetermined level or fidelity threshold. (See Tip Sheet 3 for more on fidelity thresholds.) Assessing fidelity is critical to understanding child and family outcomes: when outcomes are achieved and fidelity is high, the inference is that the practices are producing the desired outcomes.

- Assess implementation fidelity. Implementation fidelity is the degree to which strategies designed to support practice implementation, such as professional development, monitoring, and supervision, are delivered as intended. It provides information that can help explain changes in intervention fidelity. This tip sheet series focuses primarily on evaluating intervention fidelity, but it is also important to collect information on whether the activities and supports designed to improve practices, such as training and coaching, occurred as intended.
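The two measurement ideas above, practice change across time points and comparison against a fidelity threshold, can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `Observation` structure, the scoring rule (percentage of criteria met), the five-item checklist, and the 80 percent threshold are hypothetical and do not come from any particular measurement tool. A real tool may also score quality of delivery and dosage separately rather than as a single percentage.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One scored observation of a practitioner (hypothetical structure)."""
    practitioner: str
    time_point: int            # 1 = first observation, 2 = second, ...
    criteria_met: list[bool]   # one entry per criterion on the tool


def fidelity_score(obs: Observation) -> float:
    """Percentage of criteria met in one observation (assumed scoring rule)."""
    if not obs.criteria_met:
        raise ValueError("observation has no scored criteria")
    return 100.0 * sum(obs.criteria_met) / len(obs.criteria_met)


def meets_threshold(obs: Observation, threshold: float = 80.0) -> bool:
    """Compare the score with a predetermined threshold (80 is illustrative)."""
    return fidelity_score(obs) >= threshold


def practice_change(earlier: Observation, later: Observation) -> float:
    """Change in score between two time points; positive means improvement."""
    if later.time_point <= earlier.time_point:
        raise ValueError("'later' must come after 'earlier'")
    return fidelity_score(later) - fidelity_score(earlier)


# Made-up data: the same practitioner observed at two time points.
first = Observation("P01", 1, [True, False, False, True, False])
second = Observation("P01", 2, [True, True, False, True, False])

print(fidelity_score(first))           # 40.0
print(fidelity_score(second))          # 60.0
print(practice_change(first, second))  # 20.0
print(meets_threshold(second))         # False
```

In this made-up case the practitioner shows positive practice change (20 percentage points) without yet reaching the assumed threshold, which matches the pattern described above: improvement typically appears before full fidelity.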

Resources

- Data Decision-Making for Program-Wide Implementation. (ECTA, 2018).

- Considerations for Reaching and Maintaining Implementation/Practice Fidelity. (ECTA, 2018).

- Practice Profiles (Lesson 3). (National Implementation Research Network [NIRN], 2018).

The contents of this tool and guidance were developed under grants from the U.S. Department of Education, #H326P120002 and #H373Z120002. However, those contents do not necessarily represent the policy of the U.S. Department of Education, and you should not assume endorsement by the Federal Government. Project Officers: Meredith Miceli, Richelle Davis, and Julia Martin Eile.

Download Tip Sheet 1: